Remember when automatic captions on YouTube made us all go wow?

Well, not so much at first. They were quite inaccurate to begin with, but they’ve gotten much better in the past few years. So has speech-to-text technology, in general, which is why automatic speech recognition (ASR) is everywhere now.

Live captions on YouTube. Voice typing and dictation apps on smartphones. Virtual assistants — Siri, Google Assistant, Alexa... Cortana, too. Automatic captions on video calls via Zoom, Google Meet or Microsoft Teams. All good stuff that’s getting better every day. But wow? Again, not really. We’ve raised our bar for “wow” and none of them are always accurate.

Automatic Speech Recognition is good, but it’s not perfect. Not yet.

You can guess a few reasons for these errors. Accents. Rate of speech. Acronyms. There’s more, but we’ll need to zoom back a bit to understand how we measure ASR accuracy.

Be it by humans or by a machine, one common metric to measure speech-to-text accuracy is the Word Error Rate (WER). It’s not the only one, but it’s the one everyone talks about.

What is Word Error Rate (WER)?

Put simply, WER is the ratio of errors in a transcript to the total words spoken. A lower WER in speech-to-text means better accuracy in recognizing speech. For example, a 20% WER means the transcript is 80% accurate.

Out in the real world, both the WER formula and its applicability are a little more nuanced.

Word Error Rate = (Substitutions + Insertions + Deletions) / Number of Words Spoken

Our friends at Rev.ai have a great explainer on this, but here’s the gist:

- You read a paragraph out loud. It has X number of words.

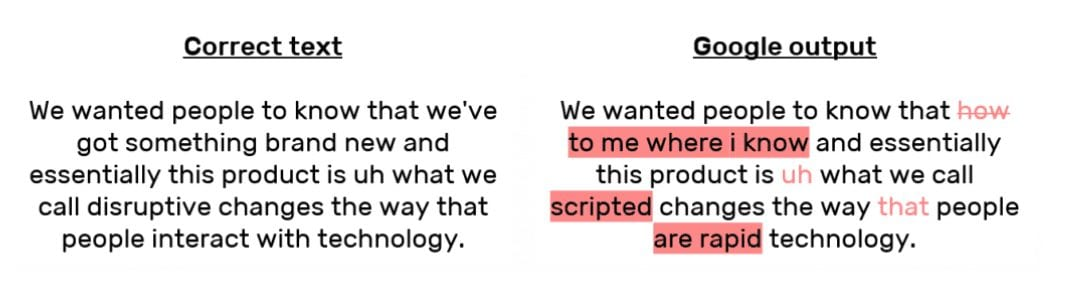

- An ASR system hears you and outputs a string of text.

- There’s some misspellings that are substituted — S.

- Sometimes the system inserts words that weren’t said — I.

- And some words are deleted, as in not picked up at all — D.

- To calculate WER, you add up the numbers S, I and D, and divide their sum by X.

Calculating Word Error Rate via Rev.ai

As for applicability, WER is not the one-ring-to-rule-them-all measure of ASR accuracy.

- It does not account for why the errors happen. More on this in a bit.

- ASR systems with relatively high WER can and do output data that is readable and useful in specific contexts.

For example, Amazon’s ASR system has a higher WER than Google’s but Alexa works just fine in most scenarios it was designed for. - Finally, as researchers at Microsoft found all the way back in 2003, true understanding of spoken language relies on more than just high word recognition accuracy.

Yet WER continues to be a good barometer to compare different ASR systems or evaluate improvements within one system. It’s also easy to understand, right?

What causes speech-to-text transcription errors?

There’s a whole host of reasons, but here are 5 common variables that cause transcription errors and affect WER in modern ASR systems.

- Accents and variations in rate of speech

- Homophones, homographs and homonyms

- Crosstalk aka overlapping dialogue

- Audio quality and background noise

- Acronyms and industry-specific jargon

1. Accents and variations in rate of speech

Everyone has an accent, even native English speakers. We speak differently, you and I. At different speeds, too. Think a New York City accent vs a Southern drawl. Or British, Scottish, Irish, Indian, Australian accents... it’s a beautiful mess, right?

Yet a little exposure and a little effort is all it takes for humans to understand minor variations in speech, pronunciation and rate of speech. So we know what sounds like “lemme” means “let me” or that “caw-fee” means “coffee.” We understand when someone with a distinctive Cockney accent says “it’s a good die,” they mean “it’s a good day.” Which is kinda fun when you change accents mid-way and say, “It’s a good die! Lemme grab some caw-fee.”

Not so much fun for ASR systems though, not without contextual training or a custom Natural Language Understanding (NLU) engine for a specific accent. Most ASR systems aren’t trained on every accent under the sun, so they have high WER due to varying accents and rates of speech in conversations.

2. Homophones, homographs and homonyms

I know it’s a tongue-twister, they all sound like the same thing and I get them mixed up too. So I looked it up and here’s what Merriam-Webster says, “Homophones are words that sound the same but are different in meaning or spelling. Homographs are spelled the same, but differ in meaning or pronunciation. Homonyms can be either or even both.”

Here are some examples:

- Homophones (sound the same) — To, too, and two. Or berth and birth.

- Homographs (spelled the same) — A bow of a ship vs a bow that shoots arrows. A fine example of vs a fine for speeding.

- Homonyms — all of the above?

The tricky part is that they sound like they mean the same thing, so I’m going with homonyms.

Puns aside, identifying and understanding homonyms requires context, which is easy for humans. Again, not so for ASR systems and there will be substitutions, so a higher error rate.

3. Crosstalk aka overlapping dialogue

We speak over each other more often than we think. Yes, some apologize immediately and pause to let the other speaker say what’s on their mind, but crosstalk is not just that. It’s almost unavoidable in day-to-day conversations, especially when it’s not face-to-face.

Think back to the last time someone said something you agreed with and you nodded along while they were saying it. Now think about how we do this on a phone call.

We say “okay” or “yes” or “right” or “I agree,” but we often say it at the tail-end of the other speaker’s statement. Sometimes, we wait for them to complete the sentence. Sometimes, we nod verbally before they’re finished. And the speaker carries on.

Meanwhile, the third human on the call is following along just fine. They know who to listen to, which voice to prioritize and what’s going on.

Not ASR systems. They are smart, but not human. Depending on how an ASR system is trained and configured, it may handle crosstalk by prioritizing one speaker and omitting the other speaker’s words. Which, of course, increases the Word Error Rate.

4. Audio quality and background noise

Working from home or an open office, we know to step into a quiet space for business conversations. We need a high-speed internet connection — for all those Zoom meetings. This ensures our collaborators can hear us, but it’s not always possible, is it?

Sometimes, we call from a phone line. Sometimes, we use speakerphones. Sometimes, the Wi-Fi network acts up. Sometimes, there are kids screaming next door.

“Sorry, could you repeat that?” ASR systems can’t say this, but they try their best to transcribe what was said anyway — with errors.

5. Acronyms and industry-specific jargon

It makes sense if you know. If not, you might go, “Is this English?”

- TIL what YMMV means.

- Lower CAC and higher ARR FTW!

- AFAIK these FAB will help you GTD. So LGTM!

- From TOFU to BOFU, we use BANT to turn an MQL to an SQL.

That right there is why human transcriptionists are often subject matter experts in their field. It’s simply impossible to know every acronym and industry-specific jargon used day-to-day. We “get” some of them and ignore the rest.

The same goes for ASR systems. Usually trained on datasets of good old English, they struggle to parse and/or make sense of sentences like the ones above. So, like humans often do with “misheard lyrics,” the output is wildly different from what the input.

But how accurate are modern ASR systems?

Despite these challenges (and a few more I ran out of room to talk about), ASR systems have improved exponentially over the last few years. Yeah, live captions on YouTube are still hit or miss due to the sheer variety of content up there, but speech recognition systems are way better than they used to be.

According to data on Statista, ASR systems developed by Google, Microsoft and Amazon have a WER of 15.82%, 16.51% and 18.42% respectively.

Another paper by a group of researchers on PubMed Central benchmarked popular Automatic Speech Recognition systems on various accuracy metrics. Across 3 different speakers, Google’s WER was 20.63% compared to 38.1% for IBM.

In our own speech-to-text accuracy testing, we found Amazon’s ASR had a WER of 22.05% with Google’s Speech-to-Text API performing just a little bit better at 21.49%. Clari Copilot (we use a combination of multiple third-party Automatic Speech Recognition systems with our own purpose-built Natural Language Processing algorithms) was the most accurate with a 14.75% WER.

Yay! All that work on fine-tuning Clari Copilot’s ASR system seems to have paid off, with > 85% transcription accuracy.

What’s next, Clari Copilot?

Higher than 85% accuracy on long-form multi-speaker transcription is great, but we’re ambitious. Each cent of a percent in transcription accuracy counts, it means more actionable intelligence for sales, marketing and product teams. So we’re building custom vocabulary rules, conversation hints and in-line transcription edits to make Clari Copilot a little more accurate everyday.